Project Ideation:

I came into this project with four potential ideas. I was interested in exploring post-mortem digital legacies and the agency/curation of those legacies. My first idea revolved around aggressively scraping the web for every piece of info on myself and creating a digital face/avatar. A larger-than-life statue, almost. This idea really didn't fit the project's interactive data requirements, however. After some careful consideration I decided against trying to mold it to fit the requirements and tabled it for the future instead.

My second idea explored similar themes and would have been an interactive digital showroom and auction with an NFT with a legally binding will for my digital legacies, authorship, etc. I'm a noob in NFTs and the law, and after talking with some friends who operate in the NFT space or work as lawyers, I wasn't confident in my ability to do things the right way in the time frame. Also, once again, the multiplayer 3D showroom aspect was born more from the project requirements than from actually making sense for the project.

My third idea was to continue working on my project 1, a WebGL Audio Visualizer. This time, however, I would connect it to the Spotify Web SDK and create a Spotify Web Player with my visualizer as the front-end using React-Three-Fiber.

My fourth and final idea was to create a 3D React-Three-Fiber multiplayer escape room with WebRTC. This game had always been a dream of mine but seemed too big or time-consuming to do well.

My initial approach was to do both projects 3 and 4 in tandem. I believed that the Spotify Visualizer could be finished in a weekend and that I could then focus on giving the escape room my all without pressure to finish.

Process:

I first started on the Spotify Web SDK and very quickly managed to create a working Spotify Web Player that I could connect to and play music from. I then began moving my Three.js code into React-Three-Fiber (R3F), reformatting and learning on the fly. I chose React-Three-Fiber because the majority of Spotify tutorials online involve React, and I figured it would be a good way to familiarize myself with the library.

After making some progress in R3F I went back to the Spotify SDK and tried to analyze my first song... I very quickly realized that things wouldn't be so simple. Spotify doesn't allow any access whatsoever to the audio nodes, which meant my initial algorithms and code wouldn't work 1 to 1. Spotify instead offers audio analysis as part of their SDK: they return a very detailed data object filled with information about the timbres, the bars, the sections, etc. This ended up being a downside, as the data objects were massive and Spotify doesn't allow multiple requests at the same time when it comes to audio analysis. Already data and timing issues were becoming apparent: just requesting these large objects could cause issues and lag when it comes to visualizing with the data.

Secondly, the analysis isn't synced to the current time of the playing song. It's indexed by timestamps within the track, which meant I would have to take the current time of the song in ms, convert it to seconds, and then look up the matching entry in the data object. Obviously this presents further lag issues, as we'd play a song, request data, wait for the data to load, get the current song ms, convert ms to seconds, find the frequency and amplitude for that second, visualize it, then repeat for every ms before every ms is over... Not to mention, if you switch songs before a song is over, the SDK can go berserk and require a hard refresh because we're still requesting data.

Before we even get to mapping or visualizing, just getting the current time accurately became a massive issue: we had to make a request every second or so to get the current ms, which quickly led to 429 errors, aka rate limiting. I turned to the world wide web to see how other developers were dealing with it and found literally only 2 visualizers out there, both using very hacky methods that led to less than ideal user experiences.

It then dawned on me that this was not a problem people had fixed but one that had caused people to quit. I was in a lot of trouble. After conversing with a senior dev for insight, he straight up told me to try a different project because of my timeframe: the problems I was dealing with don't have built-in solutions but instead would require lots of hacking and problem solving / R&D.
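To make the timing problem a bit more concrete, here is a minimal TypeScript sketch of the two pieces described above: estimating the current position locally between requests (instead of asking for it every second) and finding the analysis entry that covers that position. This is not the code from the project. The `Segment` and `PlaybackSnapshot` shapes and both function names are hypothetical, and the sketch assumes the entries are sorted by start time and don't overlap.

```typescript
// Hypothetical, trimmed-down shape of one analysis entry (times in seconds).
// The real analysis objects carry many more fields.
interface Segment {
  start: number;
  duration: number;
  loudnessMax: number;
}

// The last playback state we actually fetched, plus the local clock time of that fetch.
interface PlaybackSnapshot {
  positionMs: number;
  fetchedAtMs: number;
  isPlaying: boolean;
}

// Estimate the current position from the last snapshot instead of requesting it again.
function estimatePositionMs(snapshot: PlaybackSnapshot, nowMs: number): number {
  if (!snapshot.isPlaying) return snapshot.positionMs;
  return snapshot.positionMs + (nowMs - snapshot.fetchedAtMs);
}

// Binary search for the entry whose [start, start + duration) range contains
// the given position. Returns -1 when no entry covers it.
function findSegmentIndex(segments: Segment[], positionMs: number): number {
  const t = positionMs / 1000; // analysis timestamps are in seconds
  let lo = 0;
  let hi = segments.length - 1;
  while (lo <= hi) {
    const mid = (lo + hi) >> 1;
    const seg = segments[mid];
    if (t < seg.start) hi = mid - 1;
    else if (t >= seg.start + seg.duration) lo = mid + 1;
    else return mid;
  }
  return -1;
}
```

In a render loop this would mean one position request every so often to re-sync the snapshot, with every frame in between relying on `estimatePositionMs`. Whether that is accurate enough for a visualizer is exactly the kind of R&D the senior dev was warning about.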

With a full weekend gone to waste I began frantically trying to work on my escape room game. I began by working on WebRTC with React to create a working video call. I wasted a bit of time thinking I could actually make a multiplayer game with a variable number of players and messed around with mesh networks and GunDB before realizing I was kind of already screwed on time. I settled for a janky-ish 2-person call and then moved into React-Three-Fiber.

I started building out rooms with Sketchfab 3D models and the gltfjsx library, as well as designing puzzles. I consumed a lot of escape room content, did a lot of reading on puzzle building, and spent a lot of time in Figma designing potential rooms/items/puzzles before developing, which led to lots of issues as my ability to ideate puzzles was far greater than my ability to actually implement them. I ended up having to start with the basics and just worked on conveying information on click for each item. I then worked on using conditional rendering to assign player numbers and render separate rooms for each player. I lost some time getting routes to work before realizing that I couldn't use React-Router the way I intended: I needed the P2P connection to stay alive, so I couldn't redirect to individual endpoints in the URL. After many many many many difficulties that I will talk about later, I ended up settling on the current MVP, which is less of a game and more of a proof of concept for the technologies.
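The "player numbers instead of routes" idea can be sketched in a few lines of plain TypeScript. This is only an illustration under assumptions: the rule that whoever starts the call becomes player 1 is my guess at a reasonable convention, not something the project is confirmed to do, and `RoomId`, `assignPlayerNumber`, and `roomForPlayer` are hypothetical names.

```typescript
type PlayerNumber = 1 | 2;

// Hypothetical room identifiers; in the real project these would be R3F scenes.
type RoomId = "roomA" | "roomB";

// Assumption: in a two-person call, the peer who created the call is player 1
// and the peer who joined is player 2.
function assignPlayerNumber(isCallInitiator: boolean): PlayerNumber {
  return isCallInitiator ? 1 : 2;
}

// Pick which room to render from the player number. No URL change is involved,
// so the page (and the P2P connection living on it) never reloads.
function roomForPlayer(player: PlayerNumber): RoomId {
  return player === 1 ? "roomA" : "roomB";
}
```

In a component, the result of `roomForPlayer` would decide which room gets rendered, which keeps everything on a single page rather than on separate endpoints.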

Obstacles:

One of the main attractions of R3F was its built-in onClick and onHover raycasters, which in theory work like event listeners and handle all the obnoxious raycasting that I would otherwise have to deal with. For whatever reason (I still haven't fully diagnosed the situation), my raycasters were going haywire. In isolation they seemed fine and accurate, but when I put multiple objects together they would start interacting in funny ways, invisible geometries and meshes EVERYWHERE. I actually managed to get a hold of the lead developer behind R3F and had him take a look at my CodePen, hoping that since he's basically a wizard he'd be able to fix things quickly. Bad news bears: he told me there were probably 1 million and 1 reasons it might be occurring. The main issue was that I was getting my resources from Sketchfab and had no control over the actual build and construction of the geometries. Every 3D model on Sketchfab gets auto-converted to GLTF, and then I take that GLTF and convert it to a usable JSX component. That's two levels of abstraction on top of not making the models myself, so there could be plenty of floating invisible geometries z-fighting all over the place!

After many many many hours of debugging I found a somewhat workable solution: I could use an onHover function to set a piece of state, which would then be checked conditionally and used to trigger an alert function. This would only work after parsing each segment of the GLTF geometry and running meshBounds on the raycast. If this doesn't make much sense to you, it doesn't make sense to me either. The documentation for meshBounds is literally useless. It just says it's worse for accuracy and better for performance. That's literally all that's written about it. I don't know what changes or how, but it works.

Actually, logically it doesn't even make sense. I tried using onClick instead of onHover, assuming I could cut out the middle man since I was using the same parsing and meshBounds, but alas it doesn't work with onClick even though onHover and onClick should be using the same raycaster... I don't know. It seems convoluted to listen for hovers, setState, and then use that state to trigger a conditional instead of having an onClick trigger the alert, but that doesn't work so... yeah.

Even with this new method I was running into issues, as the Orbit Controls would go wild. When I triggered the alert this way and clicked out of it, it seemed like my cursor was removed from the DOM temporarily and placed back where I exited the alert. My camera would then perceive what was essentially a cursor teleportation and whip itself through the walls in a circle trying to re-center around my new cursor position. This obviously couldn't work, so I explored pointer-lock controls, which somewhat worked except that it removed the center cursor and changed the way event listeners worked, which meant all my onClick/onHover work would be wasted. After a while of hacking things together I came to a version of first-person controls with x-y-z axis movement locked, so that users could at least move the camera around and click stuff. It wasn't ideal that I wouldn't have actual 3D traversal movement, but it allowed me to skip adding Cannon.js physics to the game, a step I was dreading. With the amount of z-fighting and invisible geometry issues I was already having, not to mention the FPS performance issues I was beginning to run into, I knew that a physics engine would kill the experience. For a temporary fix this would suffice.
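One way to read the hover-then-state workaround is as a tiny state machine: hovering records which item is under the cursor, and a separate check decides whether to show that item's message. The sketch below models that logic in plain TypeScript. It is not R3F code and not the project's implementation. `HoverGate`, its method names, and the idea of a single scene-wide click check are all assumptions made for illustration.

```typescript
// Plain TypeScript model of the hover-sets-state workaround (hypothetical names).
class HoverGate {
  private hoveredId: string | null = null;

  // Would be called from an item's hover-start handler.
  enter(id: string): void {
    this.hoveredId = id;
  }

  // Would be called from an item's hover-end handler. It only clears the state
  // if this item is still the one recorded as hovered.
  leave(id: string): void {
    if (this.hoveredId === id) this.hoveredId = null;
  }

  // Assumption: a single scene-wide click check that looks at the hover state
  // and returns the message to show, or null if nothing is hovered.
  click(clues: Map<string, string>): string | null {
    if (this.hoveredId === null) return null;
    return clues.get(this.hoveredId) ?? null;
  }
}
```

The point of the pattern is that the per-object click raycast is never trusted; only the hover state is. It says nothing about why the click raycast misbehaved in the first place.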

Code:

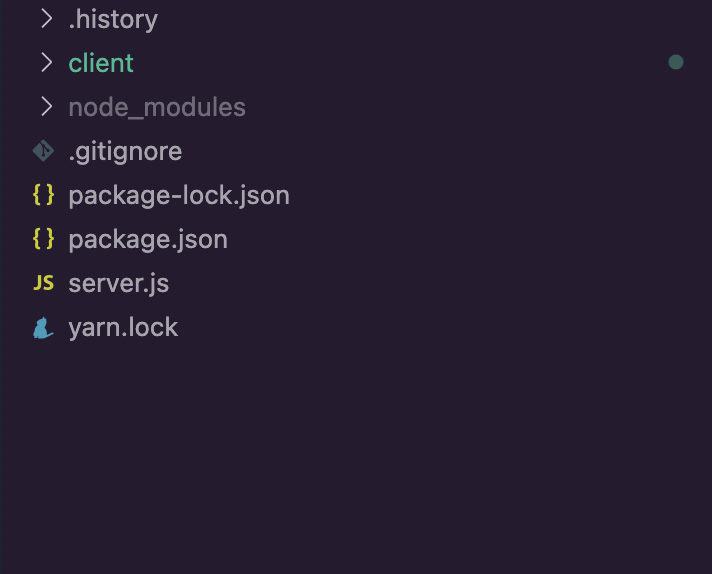

Link to GitHub: https://github.com/fakebrianho/escapeRoomv1