Ideation:

I wanted to play with face tracking and potentially some gesture tracking, and perhaps revisit some of my old Nature of Code (NoC) and Kinetic Interfaces work. This time, instead of setting up a defined space to projection map and using a Kinect, I'd use only AI-based tracking.

I've been working with shaders a lot lately and am still messing with space and particles, so the first concept that came to me was a mini-universe where your face is the center of the universe. Your face would be tracked and the 'star' would follow it. Depending on whether your hand is open or closed, particles would either be attracted to you or float randomly through space.

Tech Stack:

- Three.js > P5.js

- TensorFlow.js

- Handtrack.js

- GLSL

As usual, I chose three.js over p5. I went with handtrack.js because it works easily out of the box, and it seemed easier to adapt to three.js than reverse engineering the p5-based examples we were given.

Process:

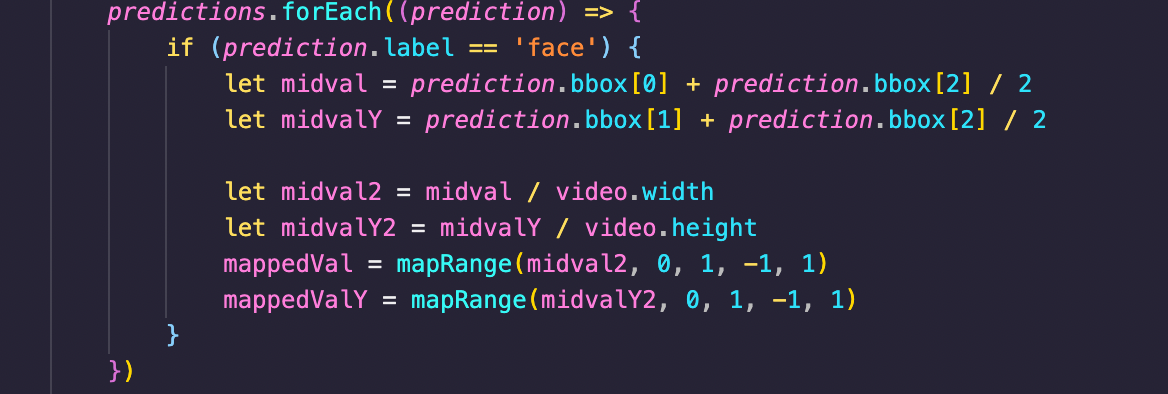

Okay, I have to admit... I'm a bit rusty with Nature of Code and vectors haha. Sorry Moon! >_< Most of the math in the GLSL and shaders I work with is faked! I tend to stay away from physics whenever possible! I started by getting Handtrack.js working. Luckily it has a simple Pong demo that shows how to use the bounding box of the face to move an element. The example only moves along the x-axis, but I was able to figure out how to get the y-value as well and fix the aspect ratio.

This actually wasn't as easy as it sounds. It took a lot of trial and error because the x and y values aren't clearly documented; you just get an array of numbers. The face and hands aren't exposed as separate objects either. They're all entries in a single predictions array, so you have to dig into each object, isolate its label, and write conditional logic based on that.
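Here is a minimal sketch of that digging. It assumes each prediction looks like `{ label, bbox: [x, y, width, height], score }` with the bbox in video pixels, and that hand labels include `"open"` and `"closed"`. Treat that shape as an assumption and log your model version's real output to confirm it. The helper names `bboxCenterToNdc` and `parsePredictions` are hypothetical. Dividing each axis by its own video dimension is what takes care of the aspect ratio, because both axes end up in the same -1 to 1 range.

```javascript
// Sketch: pull the face position and hand state out of a
// handtrack.js-style predictions array.
// ASSUMPTION: each prediction looks like
//   { label: "face" | "open" | "closed" | ..., bbox: [x, y, width, height], score }
// with bbox measured in video pixels. Log your model's real output to confirm.

// Convert the center of a pixel-space bbox to normalized device coordinates
// (NDC): x and y both in [-1, 1], with +y pointing up like three.js expects.
function bboxCenterToNdc(bbox, videoWidth, videoHeight) {
  const [x, y, w, h] = bbox;
  const cx = x + w / 2;
  const cy = y + h / 2;
  return {
    x: (cx / videoWidth) * 2 - 1,
    y: -((cy / videoHeight) * 2 - 1), // flip, since pixel y grows downward
  };
}

// Walk the predictions once, keeping the first face and the first open/closed hand.
function parsePredictions(predictions, videoWidth, videoHeight) {
  let face = null;
  let hand = null;
  for (const p of predictions) {
    if (p.label === "face" && face === null) {
      face = bboxCenterToNdc(p.bbox, videoWidth, videoHeight);
    } else if ((p.label === "open" || p.label === "closed") && hand === null) {
      hand = p.label;
    }
  }
  return { face, hand }; // either can be null when nothing was detected
}

// Usage with mock data (made up, not real model output):
const mock = [
  { label: "closed", bbox: [500, 300, 80, 80], score: 0.8 },
  { label: "face", bbox: [400, 100, 80, 80], score: 0.9 },
];
console.log(parsePredictions(mock, 640, 480));
```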

I then wrote some three.js logic where a mesh moves in x and y with your cursor. It took a bit of digging through my old code and Stack Overflow to find the logic. It's something I'd done before but don't do often. Turns out the trick is to create a Vector3 from pointer.x and pointer.y, which are already in normalized device coordinates (NDC, where each axis runs from -1 to 1). You unproject it with the camera, which converts it from NDC into world space. Then you subtract the camera position and normalize to get the direction of a ray from the camera through the pointer. (I'm not 100% sure my explanation is perfectly accurate; the code is sound, even if my explanation may not be.) Multiply that direction by the distance to the plane you want the mesh on, and add it to a clone of the camera position. You can then set your mesh's position to the result, or lerp to it.
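Take the special case of a PerspectiveCamera that isn't rotated and looks down -z. There, the three.js recipe boils down to a little trigonometry. The recipe is `vec.set(x, y, 0.5).unproject(camera)`, then `vec.sub(camera.position).normalize()`, then `camera.position.clone().add(vec.multiplyScalar(-camera.position.z / vec.z))`. Below is a plain-JavaScript sketch of just that math, so the steps are visible without three.js. It assumes a vertical field of view in degrees (as three.js uses) and a target plane at z = 0. The `camera` object shape and the `ndcToWorldOnPlane` name are hypothetical, not three.js types.

```javascript
// Sketch: map an NDC point (x, y in [-1, 1]) to the world-space point where
// the camera ray through it hits the z = 0 plane.
// ASSUMPTION: perspective camera with NO rotation, looking down -z.
function ndcToWorldOnPlane(ndcX, ndcY, camera) {
  const { position, fovDeg, aspect } = camera; // fovDeg is vertical, like three.js
  const tanHalf = Math.tan((fovDeg * Math.PI) / 180 / 2);

  // Ray direction through the NDC point (what unproject + sub gives us).
  let dx = ndcX * tanHalf * aspect;
  let dy = ndcY * tanHalf;
  let dz = -1;
  const len = Math.hypot(dx, dy, dz);
  dx /= len; // normalize
  dy /= len;
  dz /= len;

  // Distance along the ray until it reaches z = 0.
  const distance = -position.z / dz;

  // Camera position + direction * distance.
  return {
    x: position.x + dx * distance,
    y: position.y + dy * distance,
    z: position.z + dz * distance, // ~0 up to floating-point error
  };
}

const cam = { position: { x: 0, y: 0, z: 100 }, fovDeg: 90, aspect: 16 / 9 };
console.log(ndcToWorldOnPlane(1, 0, cam)); // right edge of the screen at z = 0
```

The 0.5 in the three.js version is just a depth somewhere between the near and far planes. Only the direction of the resulting ray matters, which is why the sketch can build that direction directly.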

From there, I commented out everything in the pointermove event listener and moved it into my render loop. Instead of pointer.x and pointer.y, I now use my handtrack.js data.
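A small sketch of that restructuring follows. Detection results arrive asynchronously and only overwrite a target. The render loop eases the mesh toward that target every frame, so the star stays smooth even when detection runs slower than the frame rate. The names `state`, `onDetection`, and `tick` are hypothetical. In three.js the per-frame step would typically be `mesh.position.lerp(target, 0.1)`.

```javascript
// Sketch: detection writes the latest target whenever it finishes;
// the render loop lerps toward it every frame.
const state = {
  target: { x: 0, y: 0 },   // latest world-space target from tracking
  position: { x: 0, y: 0 }, // where the "star" currently is
};

// Call this whenever handtrack.js returns a new (already converted) face position.
function onDetection(worldX, worldY) {
  state.target.x = worldX;
  state.target.y = worldY;
}

function lerp(a, b, t) {
  return a + (b - a) * t;
}

// Call once per animation frame (e.g. from requestAnimationFrame).
// Smaller smoothing = slower, smoother follow.
function tick(smoothing = 0.1) {
  state.position.x = lerp(state.position.x, state.target.x, smoothing);
  state.position.y = lerp(state.position.y, state.target.y, smoothing);
}

onDetection(10, -5);
for (let i = 0; i < 3; i++) tick();
console.log(state.position);
```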

Next step was to add the attraction. I based my logic fully on Daniel Shiffman's Attraction example from The Nature of Code. I modified it to use three.js vector functions instead of p5's built-in ones and adjusted it for three dimensions. I ended up mixing in some slightly simpler logic from a three.js attractor CodePen I found. This took me a while to get right because my acceleration kept going nuts: I was forgetting to clamp it down and reset it. (Thanks to Alice for helping me bugfix and catching some of these mistakes.) The scale of my scene had me messed up for a while as well. With my camera at (0, 0, -2) and an attractor of size 1, the movers were still flying off the screen by the time their velocity reset. After I moved the camera back to 100, things looked much better. My logic was a bit funky because my attractor was simply a mesh, while my movers were objects. I created a global attract function to make the two work together. Kind of janky, to be honest. After I got the attraction working, I set it to be called only when a hand is being tracked and that hand is closed.
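Here is a minimal sketch of NoC-style attraction carried over to 3D. It uses plain `{x, y, z}` objects so it runs without three.js. The force is G·m1·m2 / d², with the distance clamped so the force can't blow up up close. Acceleration is reset after every update, and the whole thing is gated on a closed hand. `G`, the 5 to 25 clamp, `maxSpeed`, and the `makeMover`/`step` helpers are illustrative assumptions, not the project's actual values or code.

```javascript
// Sketch of Nature of Code-style attraction in 3D with plain objects.
const G = 1; // illustrative gravitational constant

function makeMover(x, y, z, mass = 1) {
  return { pos: { x, y, z }, vel: { x: 0, y: 0, z: 0 }, acc: { x: 0, y: 0, z: 0 }, mass };
}

// "Global" attract function: the attractor can be any {pos, mass}, e.g. a mesh wrapper.
function attract(attractor, mover) {
  const fx = attractor.pos.x - mover.pos.x;
  const fy = attractor.pos.y - mover.pos.y;
  const fz = attractor.pos.z - mover.pos.z;
  const dist = Math.hypot(fx, fy, fz) || 1e-6; // avoid 0/0 when overlapping
  // Clamp distance so the force can't explode up close or vanish far away
  // (NoC's attraction example constrains the distance in a similar way).
  const clamped = Math.min(Math.max(dist, 5), 25);
  const strength = (G * attractor.mass * mover.mass) / (clamped * clamped);
  // Unit direction scaled to the strength (p5's setMag).
  return { x: (fx / dist) * strength, y: (fy / dist) * strength, z: (fz / dist) * strength };
}

function applyForce(mover, f) {
  // Newton's second law: a = F / m, accumulated for this frame.
  mover.acc.x += f.x / mover.mass;
  mover.acc.y += f.y / mover.mass;
  mover.acc.z += f.z / mover.mass;
}

function update(mover, maxSpeed = 4) {
  const v = mover.vel;
  v.x += mover.acc.x;
  v.y += mover.acc.y;
  v.z += mover.acc.z;
  // Limit speed so movers can't fling themselves off screen.
  const speed = Math.hypot(v.x, v.y, v.z);
  if (speed > maxSpeed) {
    v.x *= maxSpeed / speed;
    v.y *= maxSpeed / speed;
    v.z *= maxSpeed / speed;
  }
  mover.pos.x += v.x;
  mover.pos.y += v.y;
  mover.pos.z += v.z;
  // Reset acceleration every frame; otherwise forces keep piling up.
  mover.acc.x = 0;
  mover.acc.y = 0;
  mover.acc.z = 0;
}

// Only pull movers in while a hand is tracked and closed.
function step(attractor, movers, handState) {
  for (const m of movers) {
    if (handState === "closed") applyForce(m, attract(attractor, m));
    update(m);
  }
}
```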

I then spent waaaaaaaaaaaaaay too long trying to make things look good, and unfortunately it still looks pretty awful. I don't know what I did in my project setup with Parcel, but this is why I should have just used my boilerplates. I truly don't know what was wrong, but I threw 2-3 hours in the garbage trying to get textures working. I haven't a clue why they didn't work, and I also don't have a clue how I fixed it. I was trying to follow Jaume Sanchez Elias' tutorial on fluffy fire noise, linked below in my references/attributions. It's a 6-7 year old tutorial, so much of the code was deprecated. The main thing I managed to glean from it was how to use a sliced PNG of an explosion as a texture that's sampled vertically. The colors are taken directly from the PNG and applied like ambient occlusion, so the surface gets brighter the farther it is from the center of the sphere.
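The idea behind that texture lookup can be sketched outside a shader. In GLSL it is roughly `texture2D(tExplosion, vec2(0.0, v))`, where `tExplosion` is a hypothetical uniform name and `v` is derived from how far the noise pushed the vertex out. Here the PNG strip is replaced by a made-up array of RGB stops, and the lookup is plain linear interpolation. The colors, the `maxDisplacement` range, and the helper names are all illustrative assumptions.

```javascript
// Sketch: color a point by sampling a vertical gradient "strip" with a
// coordinate derived from its displacement. The stops below are made up;
// in the shader they'd come from the explosion PNG.
const strip = [
  [255, 255, 220], // v = 0 (brightest)
  [255, 170, 40],
  [200, 40, 0],
  [20, 0, 0],      // v = 1 (darkest)
];

// Linear interpolation between neighboring stops, like a filtered texture read.
function sampleStrip(stops, v) {
  const t = Math.min(Math.max(v, 0), 1) * (stops.length - 1);
  const i = Math.min(Math.floor(t), stops.length - 2);
  const f = t - i;
  return stops[i].map((c, k) => Math.round(c + (stops[i + 1][k] - c) * f));
}

// Farther from the sphere's center -> smaller v -> brighter color.
function colorForDisplacement(displacement, maxDisplacement) {
  const v = 1 - displacement / maxDisplacement;
  return sampleStrip(strip, v);
}

console.log(colorForDisplacement(0.5, 1));
```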

My final result looked, uh, not quite what I wanted, but it would suffice for the time being. I then decided to waste more of my life trying to get more textures into my sketch, plus a cube texture map for a space environment map. After much pain and sorrow I got the environment map working, but to my dismay it looks like garbage because I can't move it. If I rotate the scene or elements around (0, 0, 0), things get super wonky because I'm only moving the attractor on the x and y axes. Handtrack.js doesn't give me depth, and I'm not sure any OpenCV or AI library offers sufficiently reliable depth tracking, so I can't move the mesh along z. Perhaps with some research I could figure out how to rotate just the cube map, but for now it is what it is.

I decided to procrastinate on my thesis, so I revisited my sun shader, looked up some tutorials from the wonderful Yuri Artiukh, and followed his lead on combining Perlin noise in octaves to create a better-looking sun. Super cool! I was familiar with Perlin noise and fractal Brownian motion, but I had never rendered a texture onto a cube and then re-referenced it as a texture in another shader. This was super new territory for me, and it unlocks lots of performance boosts and creative opportunities. I then moved my new sun shader object into my main project and replaced the original mesh with the sun. Luckily, since I had a global attract function, I didn't need to integrate it into my new 'attractor'. Lastly, I replaced my movers' geometries and materials with the original sun shader (with some color modifications), and we have a semi-working project!
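Combining noise "in octaves" (fractal Brownian motion) means summing several copies of a noise function. Each copy doubles the frequency (lacunarity) and halves the amplitude (gain), so large blobs get progressively finer detail layered on top. To keep this sketch self-contained, a tiny hash-based 3D value noise stands in for Perlin/simplex noise. That is a swapped-in technique, not the Ashima noise the sun shader uses. The octave count, lacunarity, and gain are common defaults, assumed here.

```javascript
// Deterministic pseudo-random value in [0, 1) for an integer lattice point.
function hash3(x, y, z) {
  let h = Math.imul(x, 374761393) ^ Math.imul(y, 668265263) ^ Math.imul(z, 1440662683);
  h = Math.imul(h ^ (h >>> 13), 1274126177);
  h ^= h >>> 16;
  return (h >>> 0) / 4294967296;
}

const smooth = (t) => t * t * (3 - 2 * t); // smoothstep fade curve
const mix = (a, b, t) => a + (b - a) * t;  // GLSL-style mix

// 3D value noise: smoothly blend the random values at the 8 surrounding corners.
function valueNoise3(x, y, z) {
  const xi = Math.floor(x), yi = Math.floor(y), zi = Math.floor(z);
  const u = smooth(x - xi), v = smooth(y - yi), w = smooth(z - zi);
  const c = (dx, dy, dz) => hash3(xi + dx, yi + dy, zi + dz);
  const x00 = mix(c(0, 0, 0), c(1, 0, 0), u);
  const x10 = mix(c(0, 1, 0), c(1, 1, 0), u);
  const x01 = mix(c(0, 0, 1), c(1, 0, 1), u);
  const x11 = mix(c(0, 1, 1), c(1, 1, 1), u);
  return mix(mix(x00, x10, v), mix(x01, x11, v), w); // in [0, 1)
}

// Fractal Brownian motion: sum octaves of noise at rising frequency, falling amplitude.
function fbm(x, y, z, octaves = 5, lacunarity = 2, gain = 0.5) {
  let sum = 0;
  let norm = 0;
  let amplitude = 0.5;
  let frequency = 1;
  for (let i = 0; i < octaves; i++) {
    sum += amplitude * valueNoise3(x * frequency, y * frequency, z * frequency);
    norm += amplitude;
    frequency *= lacunarity;
    amplitude *= gain;
  }
  return sum / norm; // weighted average, so still in [0, 1)
}

console.log(fbm(0.3, 1.7, 2.2));
```

In the shader version, the same loop runs per fragment with time added to one of the coordinates to animate the surface.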

Future Steps:

Clean up my code. It's mega spaghetti at the moment, with hundreds of lines commented out. I could and should make the rest of it object-oriented, and I should actually de-object my movers. The current logic is very p5: create an object class and make a bunch of instances via a for loop, then call some kind of update/applyForce on each one every frame in the draw loop. This is super bad in three.js and really, really inefficient, because every mover is its own mesh and its own draw call. I should either use an InstancedMesh or, if I want thousands or millions of particles, switch to GPGPU and Float32Array buffers where every vertex is another particle. That way, instead of hundreds of meshes being updated, I'm just moving vertices around in a single buffer object. On paper I understand how to do all this, and I've done some form of it in past work, but frankly it would take waaay too much time to implement correctly and on time for this assignment.
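A minimal CPU-side sketch of the "single buffer" idea is below. Positions and velocities live in flat Float32Arrays (x, y, z per particle), and one loop updates them all. In three.js the `positions` array could back a `new THREE.BufferAttribute(positions, 3)` on a single `Points` geometry, with `geometry.attributes.position.needsUpdate = true` set after each update. Full GPGPU would go further and move this loop into a shader. `COUNT`, `strength`, `damping`, and the `updateParticles` name are illustrative assumptions.

```javascript
// Sketch: all particles in flat typed arrays, updated in one pass.
const COUNT = 10000;
const positions = new Float32Array(COUNT * 3);
const velocities = new Float32Array(COUNT * 3); // starts at all zeros

// Scatter particles randomly inside a 100-unit cube centered on the origin.
for (let i = 0; i < positions.length; i++) {
  positions[i] = (Math.random() - 0.5) * 100;
}

// One pass over the buffer per frame. `attracting` would come from the
// hand state (closed hand -> true); otherwise particles coast and slow down.
function updateParticles(attractor, attracting, strength = 0.05, damping = 0.98) {
  for (let i = 0; i < COUNT; i++) {
    const ix = i * 3;
    const iy = ix + 1;
    const iz = ix + 2;
    if (attracting) {
      const dx = attractor.x - positions[ix];
      const dy = attractor.y - positions[iy];
      const dz = attractor.z - positions[iz];
      const dist = Math.max(Math.hypot(dx, dy, dz), 1); // avoid dividing by ~0
      velocities[ix] += (dx / dist) * strength;
      velocities[iy] += (dy / dist) * strength;
      velocities[iz] += (dz / dist) * strength;
    }
    // Damping keeps speeds bounded instead of growing forever.
    velocities[ix] *= damping;
    velocities[iy] *= damping;
    velocities[iz] *= damping;
    positions[ix] += velocities[ix];
    positions[iy] += velocities[iy];
    positions[iz] += velocities[iz];
  }
}
```

Each frame you'd call `updateParticles(attractorPosition, handState === "closed")` and then flag the position attribute for upload. That is one buffer update in place of hundreds of per-mesh updates.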

Live Site:

https://bho.media/gestureFaceTrack/

Github:

https://github.com/fakebrianho/machineLearning_handtrack_001

References / Attribution:

Jaume Sanchez Elias: Original Sun Shader -> Mover shaders

https://www.clicktorelease.com/blog/vertex-displacement-noise-3d-webgl-glsl-three-js/

Yuri Artiukh: Sun Shader

https://www.youtube.com/user/flintyara

Ian McEwan, Ashima Arts: The definitive WebGL noise (3D simplex) that everyone and their mothers use.

https://github.com/ashima/webgl-noise/blob/master/src/noise3D.glsl

Handtrack.js - Pong Demo Code

https://github.com/victordibia/handtrack.js/